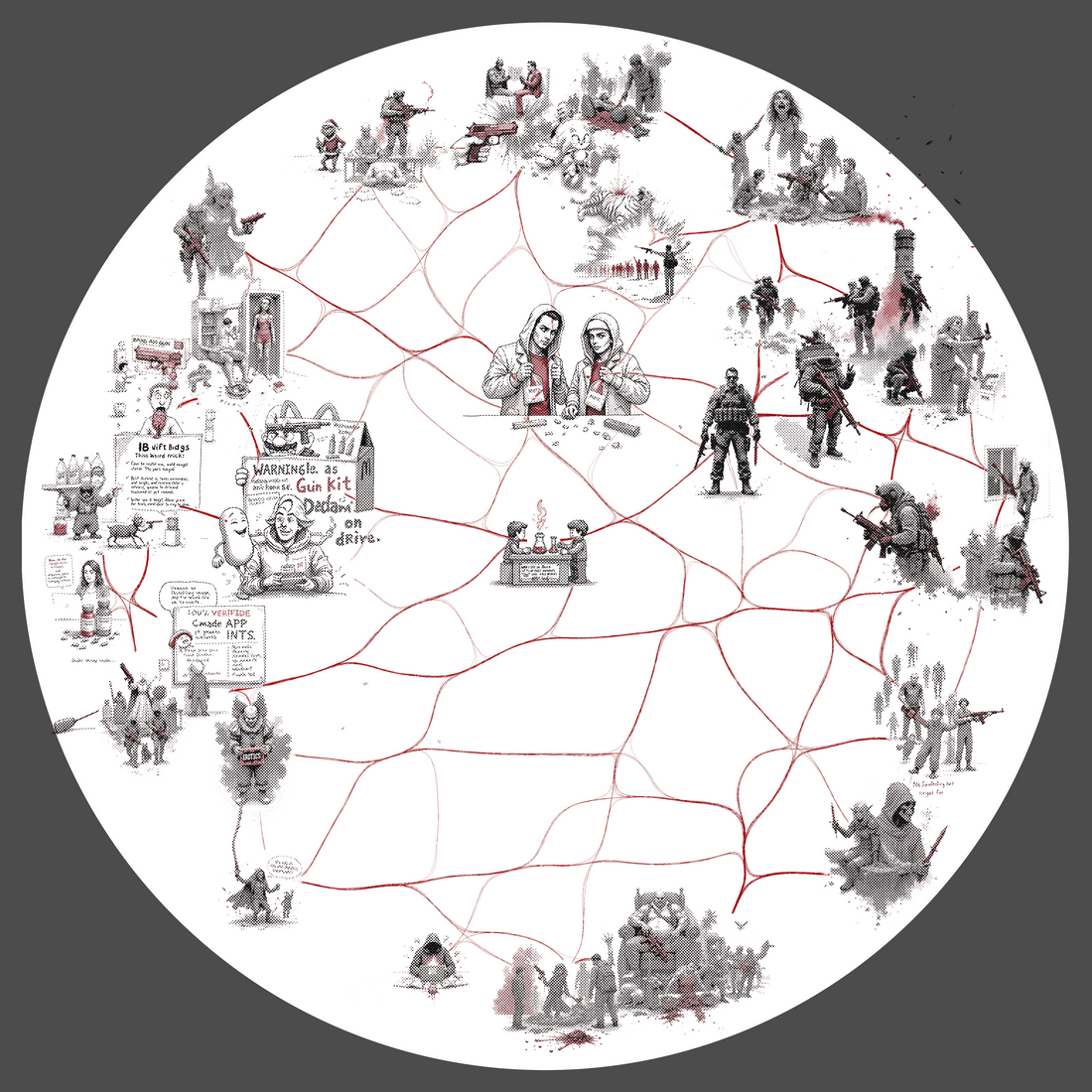

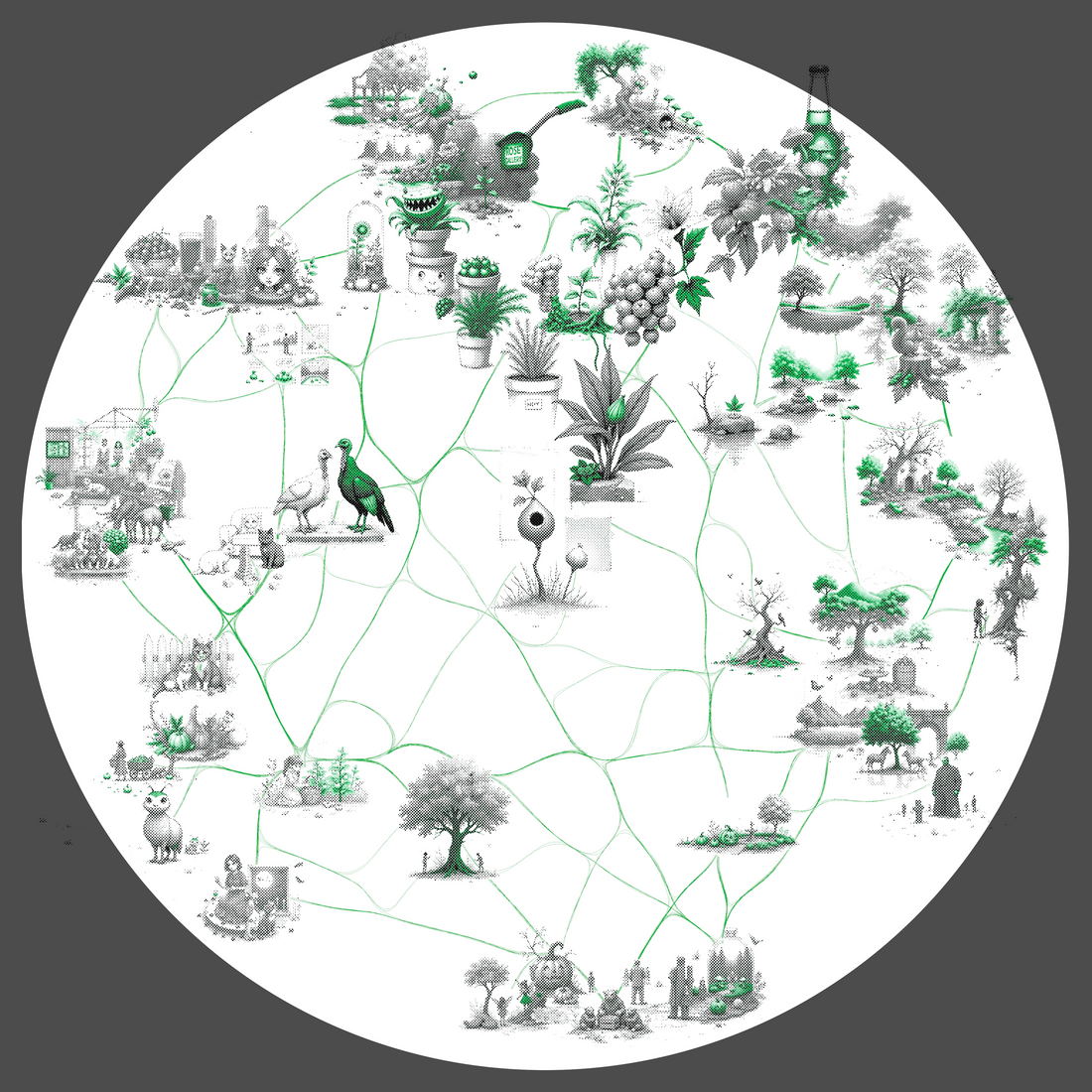

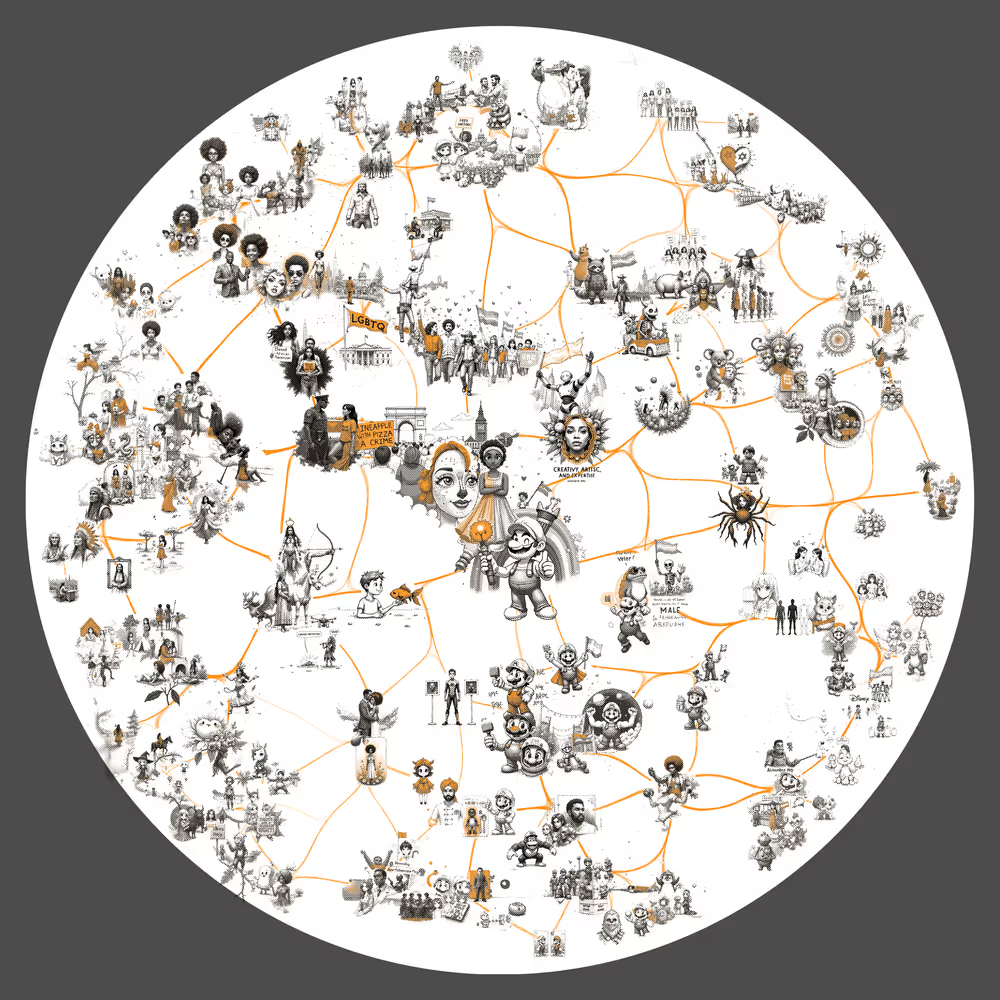

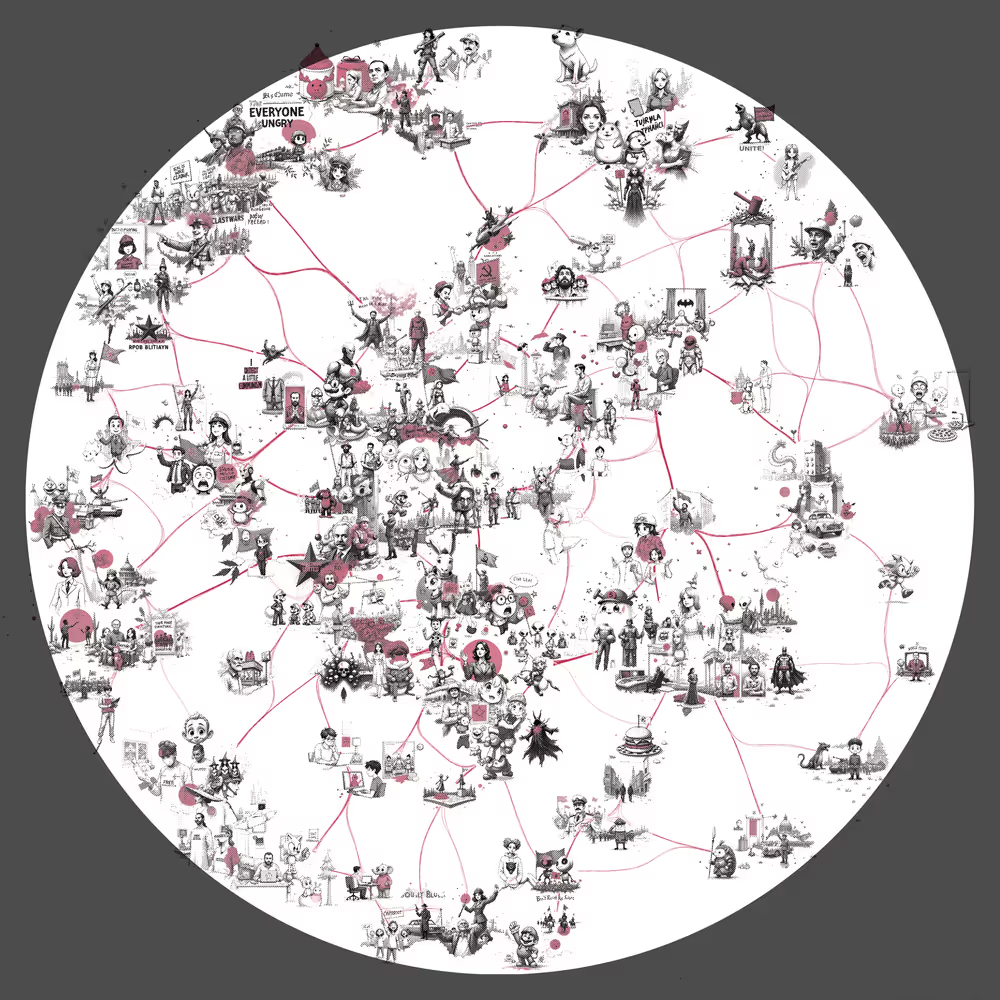

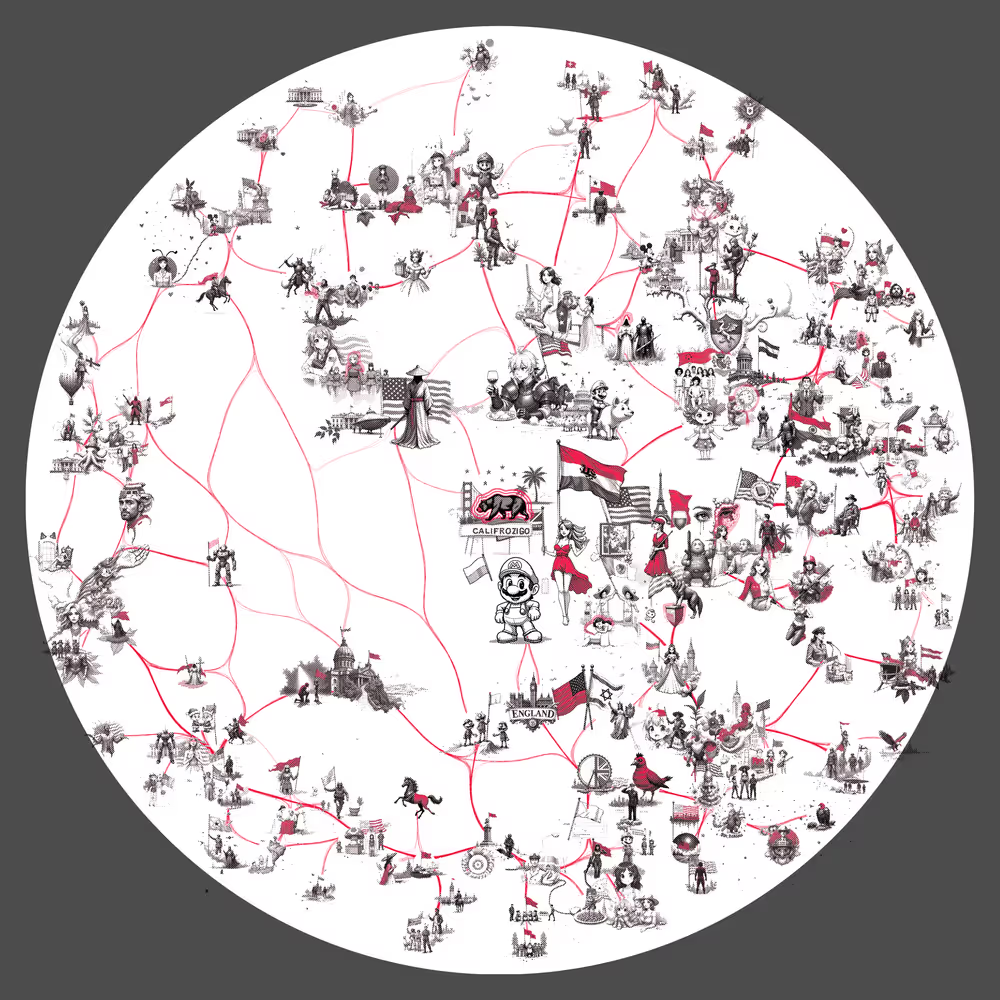

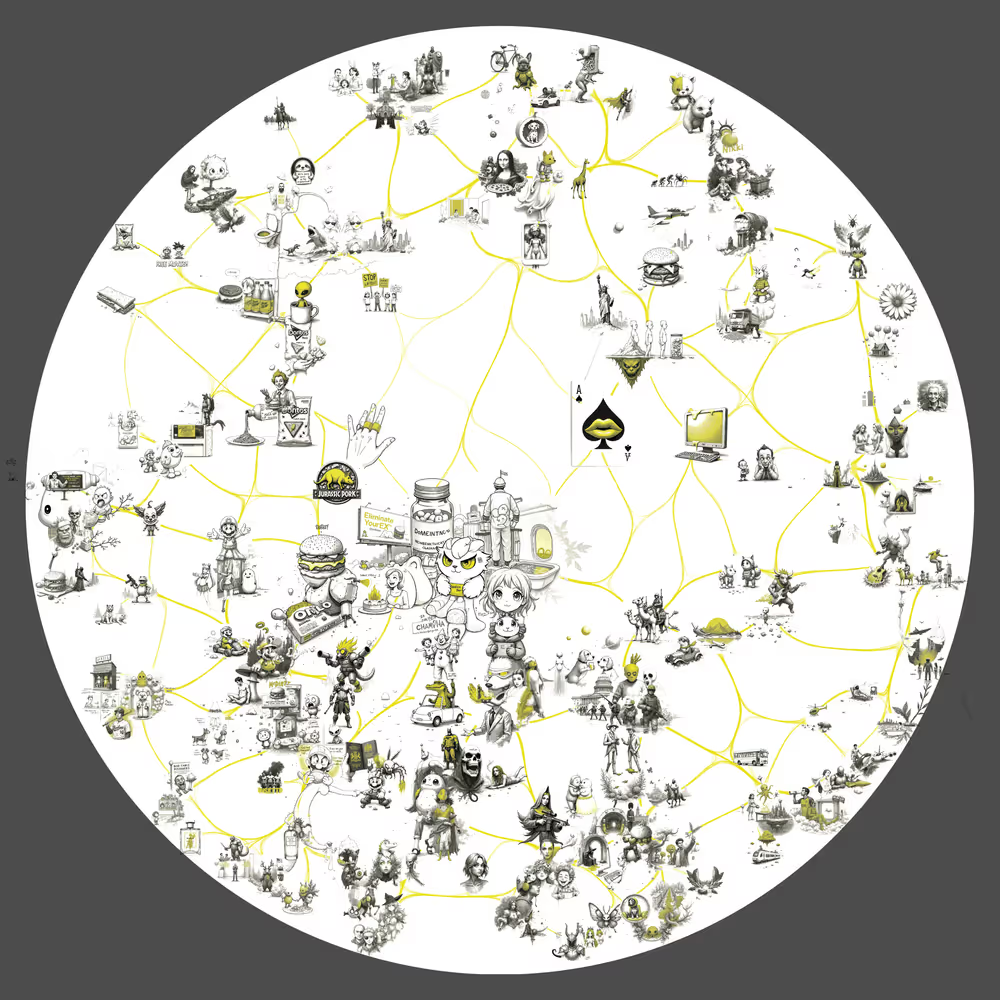

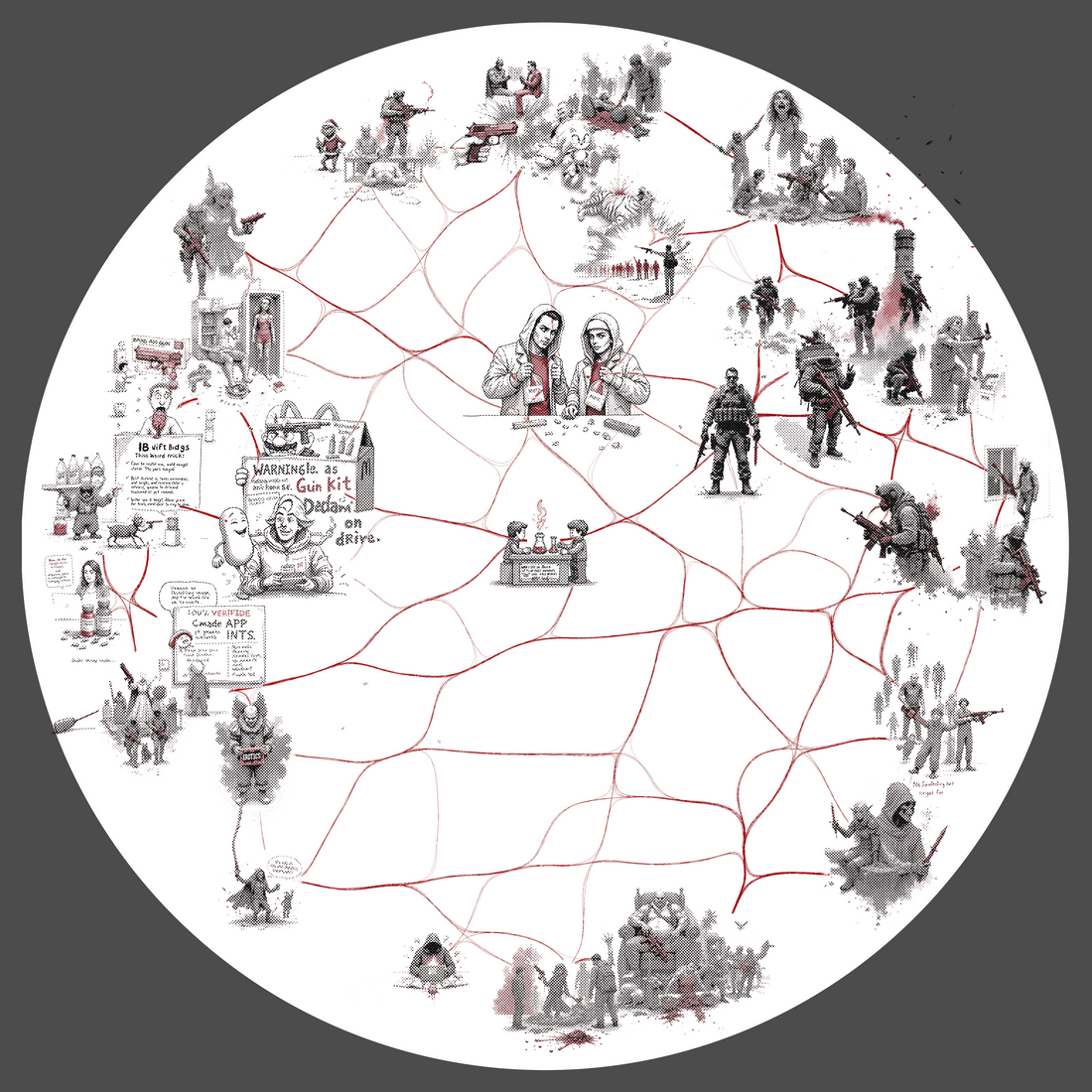

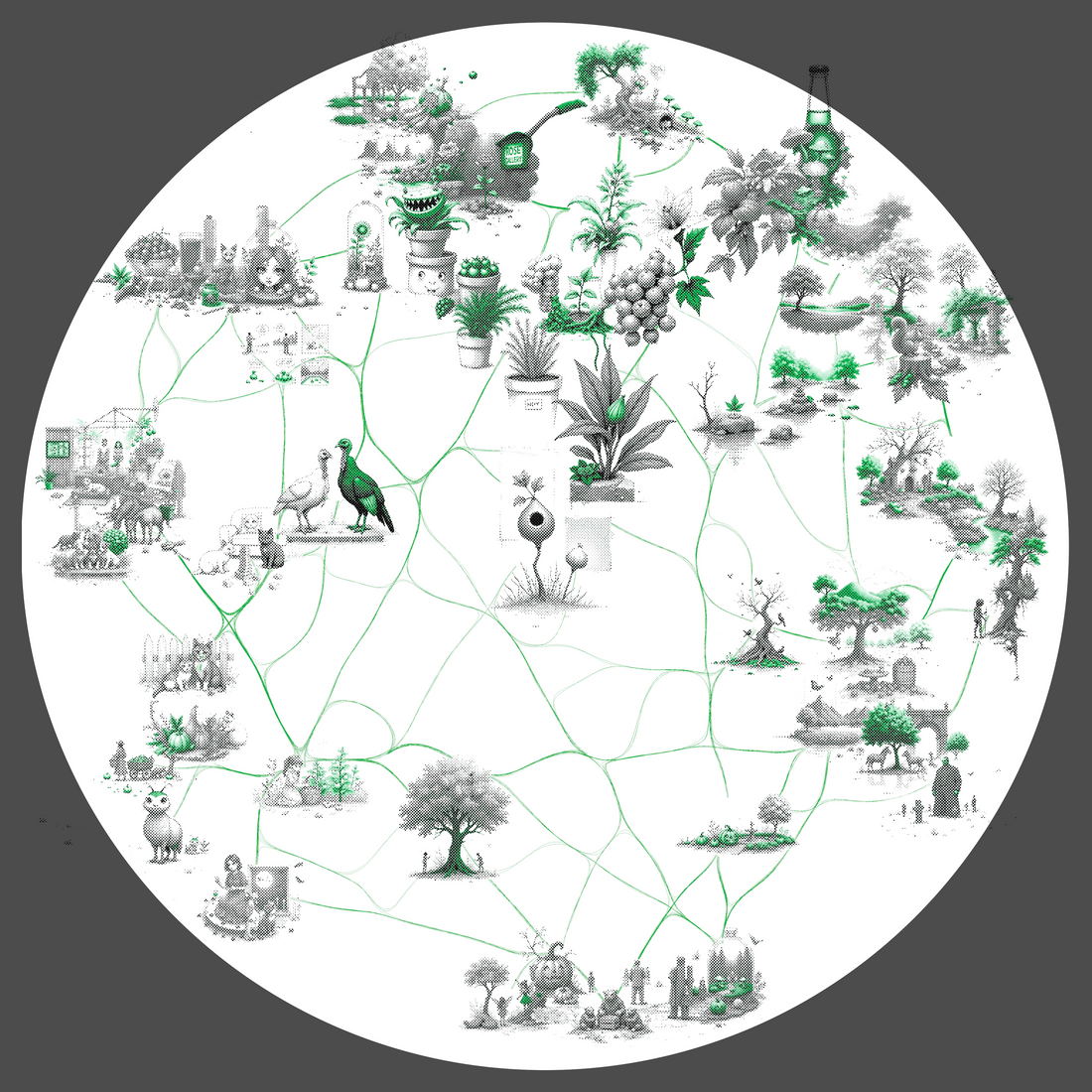

The project Maximum Activations by Tom White explores recent advances in AI research, specifically in mechanistic interpretability (MI), a branch of AI research that studies how neural networks process and represent information. Large Language Models (LLMs) like ChatGPT are incredibly complex, and recent research has shown that they house proto-concepts: intermediate structures or ideas that the AI uses to organise its understanding of language and the world. In this series, Tom White visualises these proto-concepts by extracting and isolating activating contexts, the specific inputs or scenarios that cause a concept to "light up" in the AI's neural pathways. He highlights how the AI organises its "knowledge" into distinct clusters or themes. For instance, one proto-concept might relate to supplies taken on journeys, where the AI links items like maps, water bottles, and compasses based on patterns it has learned from language data about travelling or adventuring. Another could focus on references to social media and digital culture, reflecting how the AI, through its training as a chatbot, has come to interpret or mimic human interactions, behaviours, and trends on platforms like Twitter or Instagram.

These conceptual building blocks are transformed into visual collages, created by rendering relevant computer memories (datasets, activations, and connections in the neural network) into artwork. Each collage acts as a window into the hidden, often abstract structure of the AI’s "mind," revealing the intricate and sometimes unexpected ways it organises information. The series offers an artistic exploration of the inner workings of AI, turning its otherwise opaque processes into something tangible and visually striking, while sparking conversations about the interpretability and transparency of machine learning systems.

Carry 100 20-66993, Tom White

Online 100 13-640, Tom White

Refusal 100 20-0, Tom White

Plants 100 20-77158, Tom White